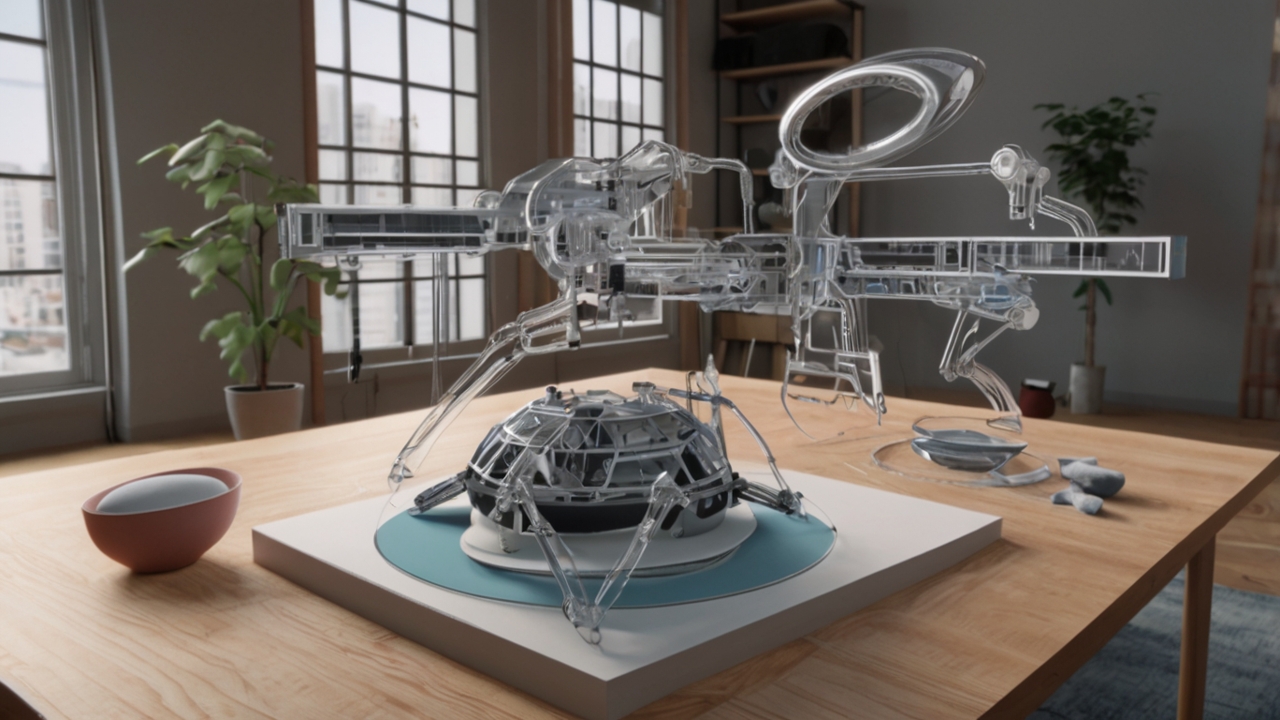

Mayumiotero – In the fast-evolving world of augmented reality (AR), Realistic Simulated Materials have become the cornerstone of visual authenticity. Unlike early AR systems that relied on flat textures, modern AR environments simulate light, reflection, and surface depth with near-photographic precision. This innovation bridges the gap between digital projections and real-world perception. As AR becomes part of daily life, from retail previews to architectural visualization, creating believable materials is no longer a luxury but a necessity. The demand for realism has pushed developers and designers to focus not only on aesthetic accuracy but also on how materials behave under different lighting conditions and viewing angles.

Why Realism Matters in AR Experiences

Realistic materials in AR can dramatically enhance immersion. When a digital object mimics the texture of wood, glass, or metal with authentic reflections and shadow behavior, users perceive it as part of their environment. This illusion builds emotional connection and trust, two key drivers of adoption in commercial AR applications. Personally, I believe realism in AR is about more than visual beauty; it is about presence and believability. If a customer can preview furniture with lifelike surfaces in their own living room, they are far more likely to trust their purchase decision.

How Material Simulation Works Behind the Scenes

The science of Realistic Simulated Materials rests on physically based rendering (PBR) and advanced light modeling. PBR calculates how light interacts with a surface from material properties such as roughness, reflectivity (often expressed as metalness), and albedo (base color). Combined with real-time ray tracing on hardware that supports it, AR platforms can now reproduce subtleties such as translucence, gloss, and grain. Some pipelines also use machine learning models trained on large datasets of real-world materials and scenes, for example to estimate lighting or to generate texture maps. This hybrid approach, combining physics and AI, helps AR objects look natural even under unpredictable environmental lighting.
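To make the PBR description more concrete, here is a minimal sketch of one widely used physically based shading model: the Cook-Torrance microfacet BRDF with a GGX distribution, in the metallic-roughness workflow. It is not taken from any particular AR platform. The function names and the single directional light are assumptions for illustration; real engines add image-based environment lighting, shadows, and tone mapping on top.

```python
import math

Vec3 = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def shade(albedo: Vec3, metalness: float, roughness: float,
          n: Vec3, v: Vec3, l: Vec3, light_rgb: Vec3) -> Vec3:
    """Radiance reflected toward the viewer from one directional light (sketch).

    n: surface normal, v: direction to the viewer, l: direction to the light.
    """
    n, v, l = normalize(n), normalize(v), normalize(l)
    n_dot_l = dot(n, l)
    n_dot_v = dot(n, v)
    if n_dot_l <= 0.0 or n_dot_v <= 0.0:
        return (0.0, 0.0, 0.0)  # light or viewer is behind the surface

    h = normalize((v[0] + l[0], v[1] + l[1], v[2] + l[2]))  # half vector
    n_dot_h = max(dot(n, h), 0.0)
    v_dot_h = max(dot(v, h), 0.0)

    # GGX (Trowbridge-Reitz) microfacet distribution with alpha = roughness^2;
    # roughness is clamped so a perfect mirror does not divide by zero.
    alpha = max(roughness, 0.05) ** 2
    a2 = alpha * alpha
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    d = a2 / (math.pi * denom * denom)

    # Smith masking-shadowing term, Schlick-GGX approximation for direct light.
    k = (roughness + 1.0) ** 2 / 8.0
    g = (n_dot_v / (n_dot_v * (1.0 - k) + k)) * (n_dot_l / (n_dot_l * (1.0 - k) + k))

    # Schlick Fresnel: dielectrics reflect about 4% head-on; metals tint by albedo.
    f0 = [0.04 * (1.0 - metalness) + c * metalness for c in albedo]
    fresnel = [f + (1.0 - f) * (1.0 - v_dot_h) ** 5 for f in f0]

    out = []
    for i in range(3):
        specular = d * g * fresnel[i] / (4.0 * n_dot_v * n_dot_l)
        # Lambertian diffuse; metals have no diffuse component.
        diffuse = (1.0 - fresnel[i]) * (1.0 - metalness) * albedo[i] / math.pi
        out.append((diffuse + specular) * light_rgb[i] * n_dot_l)
    return (out[0], out[1], out[2])


# Same gray plastic, lit and viewed head-on, at two roughness values.
glossy = shade((0.8, 0.8, 0.8), 0.0, 0.2, (0, 0, 1), (0, 0, 1), (0, 0, 1), (1, 1, 1))
matte = shade((0.8, 0.8, 0.8), 0.0, 0.8, (0, 0, 1), (0, 0, 1), (0, 0, 1), (1, 1, 1))
print(glossy, matte)
```

With everything else held fixed, the low-roughness surface returns a much brighter highlight than the rough one, which is the "gloss" the paragraph refers to; setting `metalness` to 1.0 instead tints the reflection by the albedo, as with gold or copper.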

The Role of AI in Enhancing Material Accuracy

Artificial intelligence plays a transformative role in achieving realism at scale. AI models can analyze photographs of materials and automatically generate the texture maps (such as albedo, normal, and roughness maps) that a 3D renderer needs, reducing manual effort for designers. AI can also estimate the lighting of the real scene, so that a material's reflections and color shading adjust dynamically to the surrounding light. This adaptability is what makes modern AR previews so convincing. I find it fascinating how AI has evolved from a supporting tool into the primary engine that drives realism in visual simulations.
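The lighting-adaptation idea can be illustrated without any machine learning. The sketch below swaps in a classical "gray-world" heuristic (treating the camera frame's average color as the ambient light color) as a stand-in for the learned light estimators described above; the function names and the two-pixel frame are hypothetical. Learned estimators can also recover light direction and full environment maps, which this heuristic cannot, but the principle is the same: the estimated light modulates the material's shading.

```python
from statistics import fmean

RGB = tuple[float, float, float]  # linear RGB, 0.0 to 1.0


def estimate_ambient_color(frame: list[RGB]) -> RGB:
    """Gray-world heuristic: treat the frame's average color as the ambient light color."""
    return (fmean(p[0] for p in frame),
            fmean(p[1] for p in frame),
            fmean(p[2] for p in frame))


def luminance(rgb: RGB) -> float:
    """Relative luminance using the Rec. 709 weights."""
    return 0.2126 * rgb[0] + 0.7152 * rgb[1] + 0.0722 * rgb[2]


def ambient_term(albedo: RGB, ambient: RGB) -> RGB:
    """Diffuse ambient contribution: the material's base color filtered by the scene light."""
    return (albedo[0] * ambient[0], albedo[1] * ambient[1], albedo[2] * ambient[2])


# Hypothetical camera frame from a warmly lit room (two pixels for brevity).
warm_frame = [(1.0, 0.8, 0.6), (0.6, 0.4, 0.2)]
light = estimate_ambient_color(warm_frame)
white_ceramic = (0.9, 0.9, 0.9)
print(luminance(light), ambient_term(white_ceramic, light))
```

In this example the same white ceramic picks up a warm tint, because its red channel is scaled more than its blue one; re-running the estimate every frame is what lets the shading follow changes in the room's lighting.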

Industry Applications: From Retail to Architecture

Realistic materials are revolutionizing multiple industries. In retail, AR-powered try-before-you-buy experiences now display products with authentic surface textures, from glossy ceramics to matte leather. In architecture, clients can visualize interiors with true-to-life finishes, enabling faster decision-making. Automotive companies also use realistic AR materials to showcase paint finishes and interiors. These examples show that AR realism is no longer confined to entertainment; it has become a core part of commerce and design strategy.

The Challenge of Balancing Realism and Performance

Despite impressive progress, rendering realistic materials in AR is computationally expensive. High-fidelity materials require detailed texture maps, dynamic lighting, and real-time reflection calculations, which strain the limited processing power, memory, and battery of smartphones and AR glasses. Developers must balance visual quality against performance through texture compression, adaptive rendering, and cloud-based processing. In my view, this balance defines the success of AR adoption, because even the most realistic preview loses its impact if it lags or overheats the device.
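Texture compression is one of the cheapest wins. The back-of-the-envelope calculation below estimates GPU memory for a single PBR material at 2048x2048 with full mipmaps, uncompressed RGBA8 versus ASTC, a block-compressed format widely supported on mobile GPUs. The three-map material layout and the helper names are assumptions, and real totals vary with format choice and block padding on small mip levels; the point is the ratio.

```python
# Approximate bits per pixel. ASTC stores every block in 128 bits, so its rate
# depends only on the block footprint.
BITS_PER_PIXEL = {
    "RGBA8 (uncompressed)": 32.0,
    "ASTC 4x4": 128 / (4 * 4),  # 8 bpp
    "ASTC 6x6": 128 / (6 * 6),  # about 3.56 bpp
    "ASTC 8x8": 128 / (8 * 8),  # 2 bpp
}


def texture_mib(size: int, bits_per_pixel: float, mipmaps: bool = True) -> float:
    """Approximate GPU memory for one square texture, in MiB."""
    base_bytes = size * size * bits_per_pixel / 8
    # A full mip chain adds 1/4 + 1/16 + ..., which converges to 1/3 extra.
    total = base_bytes * 4 / 3 if mipmaps else base_bytes
    return total / 2**20


def material_mib(size: int, fmt: str, maps: int = 3) -> float:
    """Memory for one PBR material made of `maps` textures
    (hypothetical layout: base color, normal, packed roughness/metalness)."""
    return maps * texture_mib(size, BITS_PER_PIXEL[fmt])


for fmt in BITS_PER_PIXEL:
    print(f"{fmt:22s} 2048x2048 material: {material_mib(2048, fmt):6.1f} MiB")
```

Relative to RGBA8, ASTC 4x4 cuts memory by 4x and ASTC 8x8 by 16x, trading some fidelity for bandwidth and memory that a phone or headset can actually afford; an adaptive renderer might pick the block size per material based on how large it appears on screen.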

Future Trends: Towards Hyperreal AR Environments

Looking ahead, the next generation of Realistic Simulated Materials will likely integrate neural rendering and spatial mapping for even deeper immersion. Soon, AR applications may simulate not only how materials look but also how they feel, through haptic feedback systems. Combined with 6DoF (six degrees of freedom) tracking, which follows both the position and the orientation of the device, users will experience AR environments that blend seamlessly with physical spaces. As the technology matures, the boundary between reality and simulation may blur to the point of invisibility.
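Six degrees of freedom means the tracker reports three rotational values (orientation) and three translational ones (position). The sketch below, with hypothetical names, represents such a pose as a unit quaternion plus a translation vector and uses it to place a point of a virtual object in world space. AR SDKs expose poses through their own types (often 4x4 matrices), so treat this only as an illustration of the underlying math.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (w, x, y, z), unit length


def quat_from_axis_angle(axis: Vec3, angle_rad: float) -> Quat:
    ax, ay, az = axis
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    s = math.sin(angle_rad / 2) / norm
    return (math.cos(angle_rad / 2), ax * s, ay * s, az * s)


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q via v' = v + w*t + u x t, with t = 2 (u x v)."""
    w, ux, uy, uz = q
    tx = 2 * (uy * v[2] - uz * v[1])
    ty = 2 * (uz * v[0] - ux * v[2])
    tz = 2 * (ux * v[1] - uy * v[0])
    return (v[0] + w * tx + (uy * tz - uz * ty),
            v[1] + w * ty + (uz * tx - ux * tz),
            v[2] + w * tz + (ux * ty - uy * tx))


@dataclass
class Pose6DoF:
    """Three rotational degrees of freedom (orientation) plus three translational ones."""
    rotation: Quat
    translation: Vec3

    def transform_point(self, p: Vec3) -> Vec3:
        """Map a point from the object's local frame into world coordinates."""
        r = rotate(self.rotation, p)
        return (r[0] + self.translation[0],
                r[1] + self.translation[1],
                r[2] + self.translation[2])


# Hypothetical anchor: turned 90 degrees about the vertical (y) axis, 2 m in front of the origin.
anchor = Pose6DoF(quat_from_axis_angle((0, 1, 0), math.pi / 2), (0.0, 0.0, -2.0))
print(anchor.transform_point((1.0, 0.0, 0.0)))
```

Because the tracker updates this pose every frame, a virtual object anchored this way stays fixed relative to the room as the user walks around it, which is what makes material details such as reflections hold up from every angle.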

Human Perception and the Psychology of Realism

Ultimately, realism in AR is as much about psychology as it is about technology. Our brains respond to familiar visual cues: light diffusion, texture consistency, and reflection symmetry. When these cues align, the brain accepts virtual objects as real. This interplay between cognitive science and digital design defines the success of AR visualizations. Personally, I believe the beauty of Realistic Simulated Materials lies not just in their technical perfection but in their ability to evoke human emotion through illusion.